Separating AI signal from noise is now a daily leadership skill. Not because AI is “magic,” but because the volume of AI announcements is growing faster than most teams can evaluate.

This article introduces the AI Signal vs Noise Framework — a practical, decision-first approach designed for product managers, tech leaders, and senior engineers navigating rapid AI change.

If you lead product, engineering, or a business function, you’ve likely felt this pattern:

A new model launches.

A competitor posts a demo.

A board member asks, “What’s our AI plan?”

Your team starts debating tools instead of outcomes.

AI Signal vs Noise Framework for Product and Tech Leaders

The AI Signal vs Noise Framework helps leaders distinguish meaningful AI progress from surface-level hype by focusing on evidence, baseline impact, and workflow relevance rather than announcements.

This article gives you a practical, repeatable way to separate real AI signals from AI noise—without panic, without hype, and without wasting cycles.

1) Why AI noise is increasing

AI noise is increasing for a few predictable reasons.

The incentives reward attention, not accuracy

Most AI updates are marketed like product launches, not evaluated like operational change. Short demos spread faster than careful evidence.

Tooling is easy to copy; differentiation is hard

When many teams can access similar models and frameworks, announcements shift from “capability” to “storytelling.”

Progress is real, but uneven

Some improvements are foundational (cost, latency, reliability). Others are narrow (a benchmark spike, a polished demo). Both get announced with the same urgency.

Everyone is searching for certainty

In uncertain markets, people look for a clear narrative. “AI will replace everything” is a clean narrative. It’s also usually not helpful.

The result: leaders get flooded with updates that feel important, but don’t translate into decisions.

This widening gap between announcements and outcomes explains the growing tension between AI hype and reality. While headlines move fast, real product and technology decisions require evidence, baselines, and operational context.

2) What qualifies as a real signal

A real AI signal is any change that can reliably affect at least one of these:

- Unit economics (cost per task, cost per decision, cost per workflow)

- Capability (what becomes possible with acceptable quality)

- Reliability (predictability, uptime, error rates, reproducibility)

- Time-to-value (how quickly teams can ship and learn)

- Risk profile (privacy, compliance, security, governance)

A useful signal is also actionable: it changes what you should do next quarter, not what you should tweet today.

To ground your evaluation in a stable reference, borrow language from established risk thinking (for example, NIST’s AI RMF emphasizes governing, mapping, measuring, and managing AI risks).

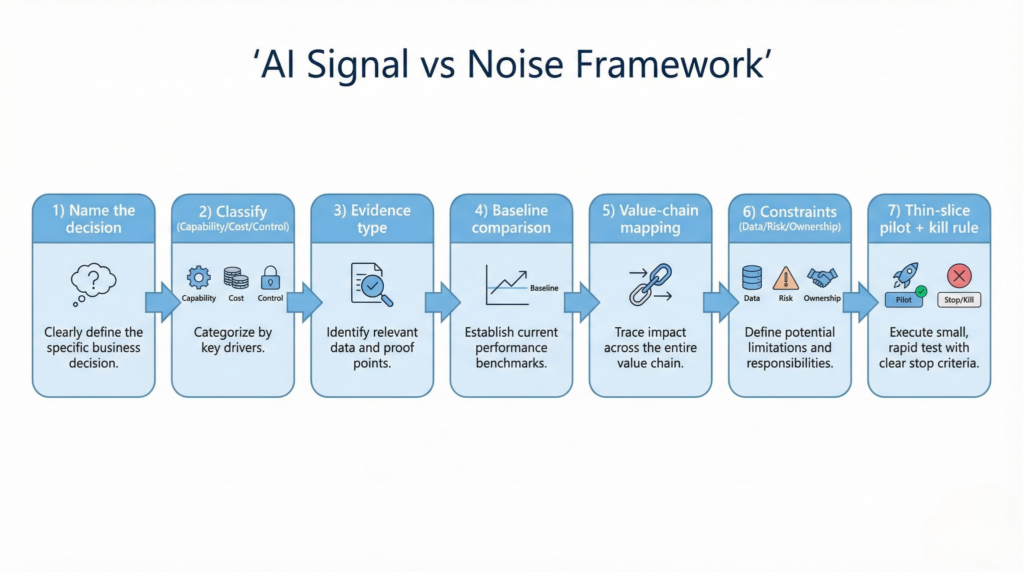

3) The AI Signal vs Noise Framework (step-by-step)

The AI Signal vs Noise Framework is intentionally simple. It does not predict the future of AI. Instead, it helps leaders evaluate whether an AI update should change a real product, engineering, or business decision.

In practice, it works as an AI decision-making framework: it helps leaders decide when to act, when to test, and when to wait.

Use this framework in a 30–60 minute leadership review. It’s designed to be repeatable, not clever.

Step 1: Name the decision you’re trying to improve

AI doesn’t “add value” in the abstract. It improves a specific decision or workflow.

Write one sentence:

- “We want to reduce support resolution time without reducing CSAT.”

- “We want to speed up QA triage while keeping defect escape rate flat.”

- “We want to improve lead qualification accuracy.”

If you can’t name the decision, you’re not ready to evaluate tools.

Step 2: Classify the update (Capability, Cost, or Control)

Most “AI breakthroughs” are one of three things:

- Capability: new tasks become possible

- Cost: the same task becomes cheaper or faster

- Control: governance, security, deployment, monitoring improves

Signals often come from cost and control, not just capability. (Leaders frequently miss this.)

Step 3: Demand the evidence type (not the claim)

Ask: what kind of proof is being offered?

- A benchmark?

- A staged demo?

- A production case study?

- A measurable before/after in a real workflow?

Then apply a simple rule:

The closer the proof is to your workflow, the stronger the signal.
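If you want every review to score evidence the same way, the rule above can be written down as a small lookup. This is a minimal Python sketch, not part of the framework itself: the evidence labels, the proximity scores, and the `signal_strength` helper are hypothetical, and treating benchmarks and staged demos as equally weak is an assumption.

```python
# Hypothetical proximity scores: how close each kind of proof sits to your own workflow.
# The ordering follows Step 3; the exact numbers are an assumption.
EVIDENCE_PROXIMITY = {
    "benchmark": 1,
    "staged_demo": 1,
    "production_case_study": 2,
    "before_after_in_our_workflow": 3,
}


def signal_strength(evidence: list[str]) -> str:
    """Rate a claim by the strongest proof offered; unrecognized evidence counts as 0."""
    best = max((EVIDENCE_PROXIMITY.get(e, 0) for e in evidence), default=0)
    if best >= 3:
        return "strong"
    if best == 2:
        return "moderate"
    if best == 1:
        return "weak"
    return "none"


print(signal_strength(["benchmark", "staged_demo"]))                   # weak
print(signal_strength(["benchmark", "before_after_in_our_workflow"]))  # strong
```

Scoring by the strongest proof, rather than counting proofs, reflects the rule: ten benchmarks still do not add up to one measured before/after in your own workflow.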

Step 4: Compare to a baseline, not to “the future”

Noise compares to a fantasy: “Soon everything will be automated.”

Signals compare to a baseline:

- What did it cost last quarter?

- What accuracy did we accept last quarter?

- What failure modes did we already have?

If the “breakthrough” doesn’t move your baseline meaningfully, treat it as noise.
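One way to make “meaningfully” concrete is to reuse the support example from Step 1 (reduce resolution cost without reducing CSAT). This is a sketch under stated assumptions: the `Metric` type, the 10% `min_gain` threshold, and the rule that no guardrail metric may get worse are illustrative choices you should replace with your own.

```python
from dataclasses import dataclass


@dataclass
class Metric:
    name: str
    baseline: float         # last quarter's value
    candidate: float        # value measured with the AI update
    higher_is_better: bool  # False for cost or resolution time, True for CSAT or accuracy


def relative_gain(m: Metric) -> float:
    """Fractional improvement over baseline: positive is better, negative is worse."""
    if m.baseline == 0:
        raise ValueError(f"baseline for {m.name} must be non-zero")
    change = (m.candidate - m.baseline) / abs(m.baseline)
    return change if m.higher_is_better else -change


def is_signal(metrics: list[Metric], min_gain: float = 0.10) -> bool:
    """Signal only if no metric gets worse and at least one improves by min_gain or more."""
    gains = [relative_gain(m) for m in metrics]
    return all(g >= 0 for g in gains) and any(g >= min_gain for g in gains)


# Illustrative numbers, not real data.
cost = Metric("cost_per_ticket", baseline=4.00, candidate=3.20, higher_is_better=False)
csat = Metric("csat", baseline=4.5, candidate=4.5, higher_is_better=True)
print(is_signal([cost, csat]))  # True: about a 20% cost gain, CSAT unchanged
```

With the same cost cut but CSAT slipping from 4.5 to 4.4, `is_signal` returns `False`: the update moved one number while breaking the guardrail you named in Step 1.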

Step 5: Map it to your value chain (where it actually touches work)

Pick one place it could land:

- Discovery (research synthesis, tagging, insights)

- Delivery (coding support, test generation, incident summaries)

- Operations (support triage, finance ops, HR workflows)

- Customer experience (search, recommendations, assistance)

If you can’t map it to a workflow, it’s not a product decision yet.

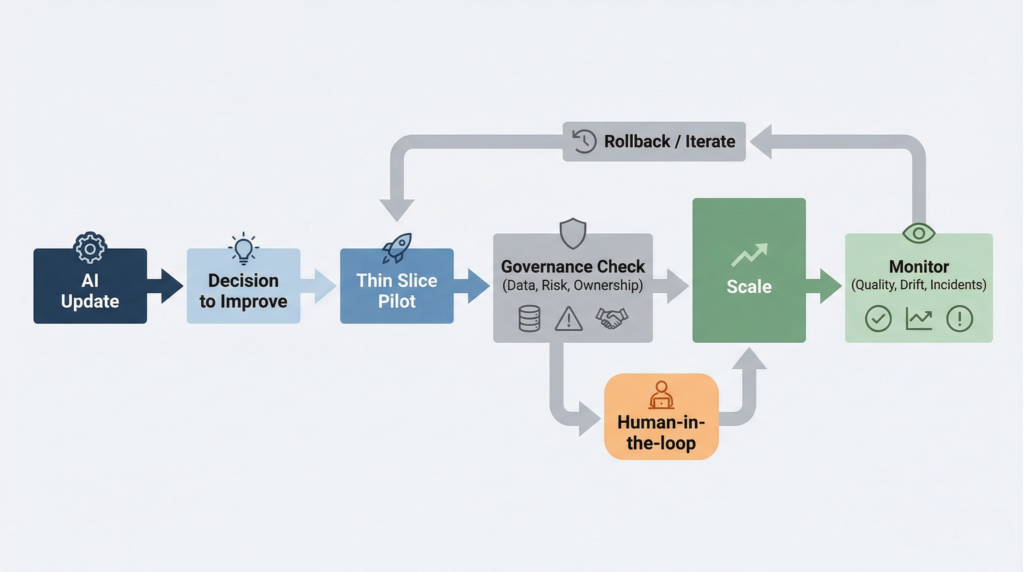

Step 6: Check constraints (Data, Risk, and Ownership)

Many AI projects stall here rather than at model selection.

- Data: Do we have the inputs? Are they clean enough?

- Risk: What is the harm if it is wrong?

- Ownership: Who owns quality, monitoring, and rollback?

This is where governance frameworks such as NIST’s AI RMF matter, especially for customer-facing or regulated use cases.

Step 7: Run a “thin slice” test with a kill rule

Create a small pilot that is:

- Narrow scope

- Clear metric

- Human-in-the-loop

- Easy to turn off

Add a kill rule upfront:

“If we don’t beat baseline by X in Y weeks, we stop.”

This protects focus and makes the evaluation non-emotional.
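Writing the kill rule down, whether as code or as a line in a pilot tracker, removes any later debate about whether it was triggered. A minimal sketch, assuming the gain is already measured as a fraction of baseline; `kill_rule_decision` and its return labels are hypothetical.

```python
from datetime import date, timedelta


def kill_rule_decision(
    start: date,
    today: date,
    weeks: int,            # Y: the time box agreed before the pilot starts
    required_gain: float,  # X: minimum improvement over baseline, as a fraction
    observed_gain: float,  # improvement over baseline measured so far
) -> str:
    """Apply 'If we don't beat baseline by X in Y weeks, we stop.'"""
    if observed_gain >= required_gain:
        return "proceed"       # X reached: the pilot has earned a next step
    if today >= start + timedelta(weeks=weeks):
        return "stop"          # Y weeks are up and X was not reached
    return "keep testing"      # still inside the time box


start = date(2024, 1, 1)
print(kill_rule_decision(start, date(2024, 1, 20), 6, 0.10, 0.04))  # keep testing
print(kill_rule_decision(start, date(2024, 2, 12), 6, 0.10, 0.04))  # stop
```

Both X and Y are fixed arguments rather than values adjusted mid-pilot, which is the point: the stop condition is agreed before anyone has seen results.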

4) Applying the framework to real examples

Below are realistic examples you can reuse in leadership discussions.

Example A: “New model has higher benchmark scores”

- Classify: capability (maybe)

- Evidence: benchmark (weak unless it mirrors your workflow)

- Baseline check: does it reduce the errors you actually see today?

- Value chain: maybe helps summarization, classification, support drafting

- Constraint check: reliability and evaluation matter more than a single score

Signal test: run a thin slice on your hardest 50 cases (not average cases).
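A thin slice on the hardest cases needs a working definition of “hardest.” One option, and it is an assumption: score every case in your evaluation set with today’s process, take the lowest-scoring 50, and compare the new model only on those. The `EvalCase` type, the 0.0 to 1.0 quality scores, and both helpers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class EvalCase:
    case_id: str
    baseline_score: float  # quality of today's process on this case, 0.0 to 1.0 (hypothetical scale)


def hardest_cases(cases: list[EvalCase], n: int = 50) -> list[EvalCase]:
    """The n cases where the current baseline scores lowest; ties broken by case_id."""
    return sorted(cases, key=lambda c: (c.baseline_score, c.case_id))[:n]


def mean_gain_on_hardest(
    cases: list[EvalCase], candidate_scores: dict[str, float], n: int = 50
) -> float:
    """Average improvement of the new model over baseline, measured only on the hardest cases."""
    hard = hardest_cases(cases, n)
    if not hard:
        raise ValueError("evaluation set is empty")
    return sum(candidate_scores[c.case_id] - c.baseline_score for c in hard) / len(hard)


cases = [EvalCase("a", 0.9), EvalCase("b", 0.2), EvalCase("c", 0.5)]
print(round(mean_gain_on_hardest(cases, {"a": 0.9, "b": 0.6, "c": 0.5}, n=2), 3))  # 0.2
```

Case “a” is excluded because the baseline already handles it well; averaging it in would dilute exactly the difference a benchmark headline claims to show.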

Example B: “Tool integration makes AI usable inside workflows”

Often this is a bigger signal than a model upgrade.

- Classify: control + time-to-value

- Evidence: internal adoption + time saved

- Value chain: delivery and operations improve first

- Constraint check: permissions, logging, privacy, monitoring

McKinsey & Company has repeatedly highlighted that value comes from integrating AI into real operating practices, not from pilots that stay isolated.

Example C: “AI agents will replace roles”

- Classify: none of the three; it is a narrative (usually noise)

- Evidence: demos and anecdotes

- Baseline check: most org work is constrained by data access, approvals, and accountability

- Constraint check: risk and ownership make “replacement” a high bar

A calmer interpretation: agents can work well for accelerating internal processes when scope and controls are clear (Harvard Business Review).

Example D: “AI Index says adoption is growing”

This is a strategic signal—but not a product roadmap by itself.

Use reports like Stanford HAI’s AI Index to understand macro trends, cost curves, and adoption patterns. Then translate those trends to your own context.

5) Common mistakes leaders make

Mistake 1: Confusing awareness with readiness

Knowing what’s happening is not the same as being ready to implement.

Mistake 2: Starting with tools instead of decisions

Tool-first thinking creates scattered experiments and weak ROI.

Mistake 3: Over-optimizing for the “best model”

Most teams get more value from:

- better prompts and guardrails

- clear evaluation sets

- workflow integration

- monitoring and feedback loops

Mistake 4: Ignoring operational risk until late

Risk isn’t a checkbox at launch. It’s a system you run continuously (NIST).

Mistake 5: Treating AI as a single initiative

AI isn’t one project. It’s a capability that will touch many workflows—each with different ROI and risk.

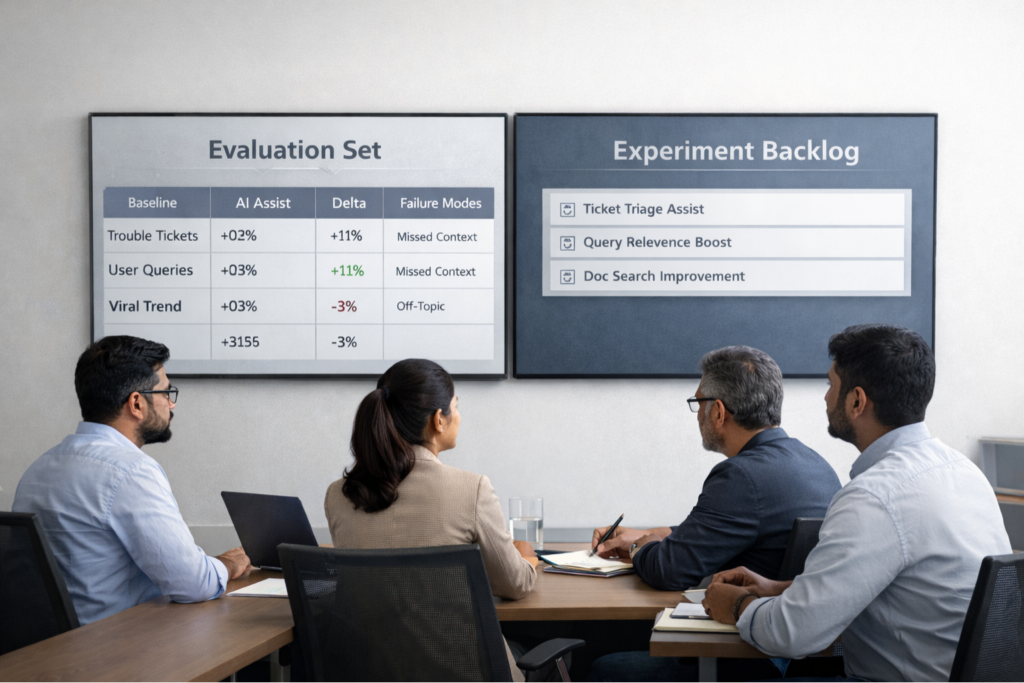

6) How product teams should use this framework

Product teams should turn AI evaluation into a product discipline, not a reaction.

Applied consistently, this approach becomes a practical AI strategy for product managers: one that prioritizes measurable outcomes, fast learning cycles, and user impact over experimental hype.

Build an “AI Decision Review” into discovery

Once per sprint (or every two weeks), review:

- 3–5 external updates

- 1–2 internal workflow pain points

- 1 metric you want to move

Use the 7 steps above to decide what to ignore and what to test.

Maintain a small “evaluation set”

Create a stable set of:

- tricky customer tickets

- ambiguous user queries

- edge-case documents

- representative workflows

This prevents “demo-driven development” and makes progress measurable.
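To keep the set genuinely stable, it helps to fingerprint it, so a result is only ever compared with results from the identical set, and to check that every category above is represented. A minimal Python sketch; the `EvalItem` fields, category names, and helpers are hypothetical, and SHA-256 over sorted JSON is just one reasonable way to get an order-independent fingerprint.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class EvalItem:
    category: str    # e.g. "tricky_ticket", "ambiguous_query", "edge_case_doc", "workflow"
    input_text: str
    expected: str    # what a good answer must contain or decide


REQUIRED_CATEGORIES = {"tricky_ticket", "ambiguous_query", "edge_case_doc", "workflow"}


def fingerprint(items: list[EvalItem]) -> str:
    """Order-independent SHA-256 of the set, so runs are only compared on identical sets."""
    rows = sorted(json.dumps(asdict(i), sort_keys=True) for i in items)
    return hashlib.sha256("\n".join(rows).encode("utf-8")).hexdigest()


def missing_categories(items: list[EvalItem]) -> set[str]:
    """Required categories that have no items yet."""
    return REQUIRED_CATEGORIES - {i.category for i in items}


items = [
    EvalItem("tricky_ticket", "Refund for a cancelled annual plan?", "route to billing"),
    EvalItem("ambiguous_query", "cancel", "ask a clarifying question"),
]
print(sorted(missing_categories(items)))                    # ['edge_case_doc', 'workflow']
print(fingerprint(items) == fingerprint(items[::-1]))       # True
```

Storing the fingerprint next to each evaluation result makes it obvious when someone quietly swapped in easier cases between runs.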

Treat AI features like any other product feature

Define:

- user outcome

- success metric

- failure mode

- support plan

- rollback plan

Use internal content as leverage

If your organization already documents product decisions, support flows, architecture, or policies, you have an advantage: AI can amplify well-structured knowledge.

For related thinking on product strategy under AI pressure, these internal reads are relevant:

- “AI Signals for Leaders” by Vatsal Shah

- “Latest AI Model Updates: 7 Smart Changes for Leaders” by Vatsal Shah

- “Product Growth Strategy in the Age of AI” by Vatsal Shah

7) How leaders should use this framework

Leaders who consistently apply the AI Signal vs Noise Framework build stronger judgment over time, enabling teams to move faster without increasing operational or reputational risk.

Leaders should use AI to improve decision quality, not just output volume.

Adopt a simple leadership posture: calm, curious, accountable

- Calm: don’t let headlines set strategy

- Curious: test quickly, learn honestly

- Accountable: keep ownership human, even when execution is assisted

Harvard Business Review has written about AI creating real tensions in work (speed vs safety, autonomy vs accountability). Naming those tensions early helps teams make better choices.

Viewed this way, responsible AI adoption for tech leaders is less about speed and more about sequencing—starting with low-risk wins before scaling into core systems.

Build a portfolio, not a bet

Separate work into three lanes:

- Operational wins (low risk, clear ROI)

- Product differentiation (medium risk, strategic upside)

- Exploration (time-boxed learning)

Most organizations should invest heavily in operational wins first.

Make governance visible and lightweight

Good governance is not bureaucracy. It is:

- clear rules for data access

- clear ownership for monitoring

- clear escalation paths

- clear auditability

This aligns well with established AI risk management practices (NIST).

Focus on compounding advantage

The biggest advantage is not “using AI first.”

It’s learning faster and integrating better.

8) Closing reflection

AI news will keep accelerating. Some of it will matter. Much of it won’t.

Your job as a product or tech leader is not to chase updates. It’s to build a decision system that stays calm under change.

When you apply the AI Signal vs Noise Framework consistently, something shifts:

- your roadmap becomes clearer

- your experiments become sharper

- your team stops reacting and starts learning

And that’s what strong leadership looks like during a technology transition.

Frequently Asked Questions

What is an AI signal vs noise framework?

An AI signal vs noise framework is a repeatable method to evaluate AI updates by evidence, baseline impact, workflow fit, constraints, and measurable outcomes—so leaders act on useful changes and ignore hype.

How do product teams avoid AI hype?

Product teams avoid AI hype by starting from a decision or workflow, using stable evaluation sets, running thin-slice pilots, and measuring impact against a baseline before scaling.

What is the fastest way to test an AI update safely?

The fastest safe approach is a narrow pilot with human review, clear success metrics, and a kill rule—so you can learn quickly without creating operational risk.